Setting up a personal Mastodon instance

Published

I'll leave it to the Wikipedia article I linked above to explain what Mastodon is and how it works in detail. The TL;DR version is that Mastodon is an open source, decentralized social network. "Decentralized" simply means that there is no single server or system owned by a single corporation or individual; instead, it's a federation of lots of servers or "instances" around the world that allow users on different instances to follow each other and exchange content, just like on Twitter. This way there is no single "owner", and each instance has one or more admins that decide how to moderate content or which other instances to block (for example, some admins may not want their instances to communicate with other instances that spread hate, racism and other no-no topics). There are no ads either, which is a plus.

Most people new to Mastodon just create an account on public instances, and you can find instances focussed on some specific topics.

Personally, I first created an account with the hachyderm.io instance run by Kris Nova, but then I decided that since I was just getting started, I might as well set up my own instance already and be in full control, because why not 😀

This post will describe how I set up my instance from start to end. Some of the choices I have made offer some flexibility, for example you can use another service to send emails or store media if you don't want to use the same services that I use.

Prerequisites

Domain name

So the first step I recommend is to configure Cloudflare with your domain. If you purchase your domain directly with Cloudflare (which is also a registrar) then this is done automatically for you. If not, you can add a site to your Cloudflare account specifying the domain and follow the simple instructions. It takes just a few minutes.

Object storage

If you go with my recommendation and use Wasabi, create an account and a set of access/secret keys, then create a bucket with the same name as the domain name you want to use with the CDN - which is Cloudflare in our case - in a location close to your server (for me Frankfurt since my server is in Nuremberg). So for my botta.social Mastodon instance, I created a bucket named files.botta.social. It is possible to serve files directly from Wasabi, but due to their policy regarding the bandwidth I recommend you use Cloudflare in front of the bucket to cache the assets and save on bandwidth used by Wasabi.

Once you have created the bucket, go to the bucket's settings and edit the policy as follows:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::files.botta.social/*"
    }
  ]
}

Of course, replace the name of the bucket with yours. This policy makes it possible to serve the files out of the bucket with permanent public URLs, instead of pre-signed URLs that expire after a certain amount of time.
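If you would rather not edit the bucket name by hand, the same policy can be printed for any bucket with a small shell function. This is only a sketch: `make_bucket_policy` and `files.example.com` are names I made up for illustration, and the JSON body simply mirrors the policy shown above.

```shell
#!/usr/bin/env bash

# Print a public-read policy for the bucket given as the first argument.
# The function name is hypothetical; the policy is the one shown above
# with the bucket name substituted in.
make_bucket_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "*" },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# Example: make_bucket_policy files.example.com > policy.json
```

You can then paste the contents of policy.json into the policy editor in the bucket's settings.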

Now go to Cloudflare, and create a CNAME record with the same name as your bucket and pointing to the correct Wasabi endpoint hostname depending on the location/region of the bucket - you can see the list here.

With these changes, your Mastodon assets will be served and cached by Cloudflare under your subdomain, instead of being served by Wasabi.

SMTP relay

Creating the server

If you go with Hetzner, you can follow the instructions below, otherwise it depends on the provider you use. One advantage of using Hetzner Cloud over other providers is that it offers a firewall feature in front of your server, and that's recommended vs configuring a software firewall inside the server because that way malicious traffic is blocked before even reaching your server at all.

First things first, create an account with Hetzner Cloud if you don't have one already. Then create a project from the console with a name like "Mastodon" or whatever you like, and add your public SSH key under Security -> SSH Keys from the sidebar. You will then be able to configure your server so that it already has this key, letting you SSH into the server easily.

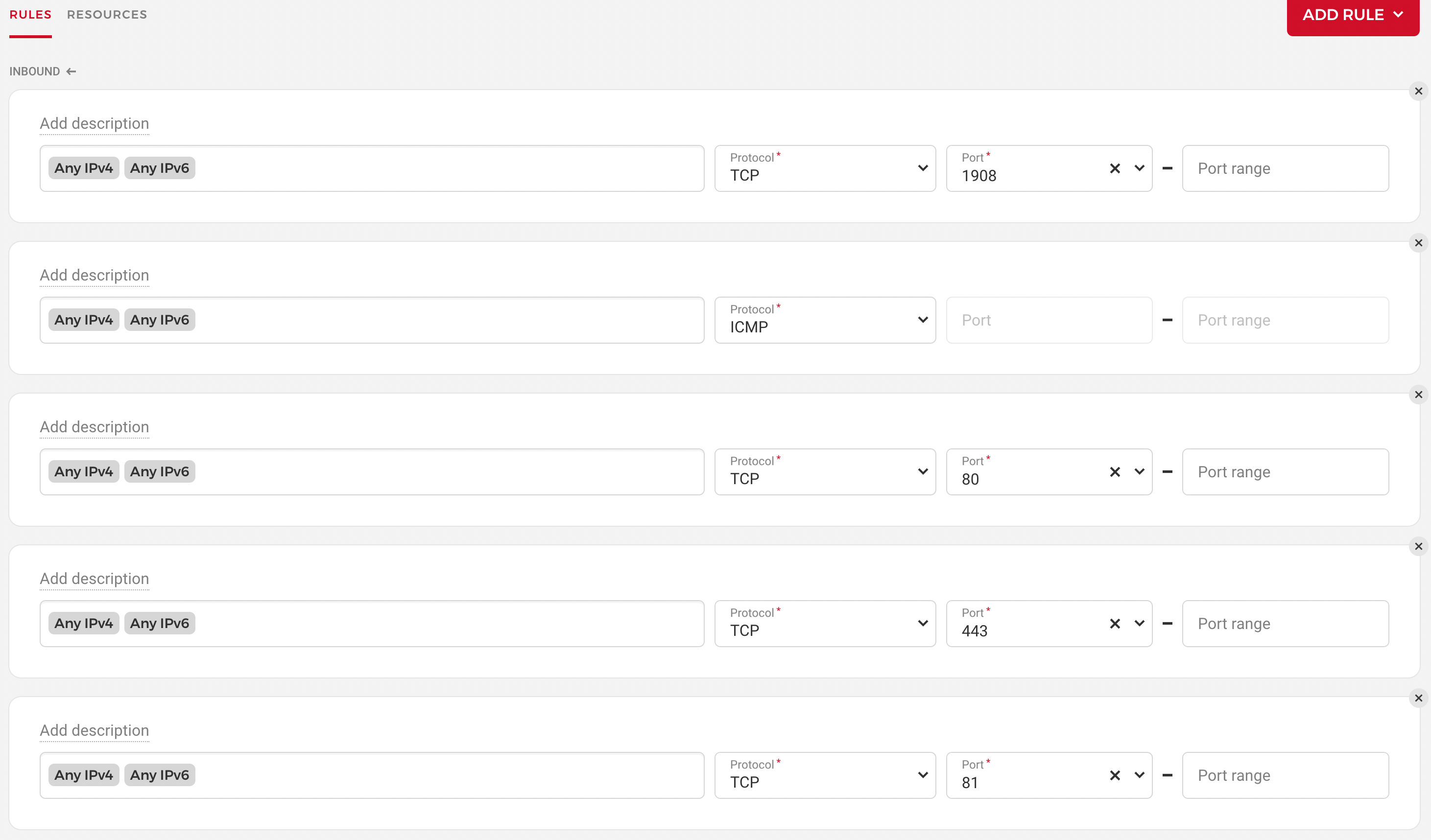

Next, head to Firewalls, and create a new firewall like in the picture:

So you basically want inbound rules that allow traffic from any IP address to TCP ports 1908, 80, 443 and 81, plus ICMP for pings. Ports 80 and 443 are for regular web traffic, 81 is for the Nginx Proxy Manager admin panel which we'll set up later, and 1908 is the port I use for SSH. This is the one port that you can change to whatever you like, as long as it's different from the default port 22, which avoids most scripted attacks against SSH.
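Once the server exists and the services are running, you may want to confirm from your own machine that the firewall lets the expected ports through. The sketch below uses bash's built-in /dev/tcp redirection; `port_open` and `check_ports` are hypothetical helper names. Keep in mind that a port only shows as open when something is actually listening on it, so 80, 443 and 81 will report closed until Nginx Proxy Manager is up.

```shell
#!/usr/bin/env bash

# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device and on coreutils' timeout.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print "<port> open" or "<port> closed" for every port passed after the host.
check_ports() {
  local host="$1"; shift
  local port
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "${port} open"
    else
      echo "${port} closed"
    fi
  done
}

# Example (replace the placeholder with your server's address):
#   check_ports <server IP> 1908 80 443 81
```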

Now go to Servers, and click on Add Server. Here you can choose the location that you prefer (my instance is in Nuremberg since I am based in Finland, Europe, and Nuremberg has good latency also for US users). For the image I recommend Ubuntu 22.04 if you want to follow my instructions to the letter. For the instance type I recommend an instance with more than 4GB of RAM; I chose the CX31 with 2 cores and 8GB of RAM; instances with shared cores are good enough. The ones with dedicated cores may offer improved performance but they are quite a bit more expensive.

Under Networking you can leave the default settings. Under SSH Keys select the key you added earlier, and under Firewalls select the firewall you created earlier. I also recommend you enable backups. Give a name to the server and click on Create and buy now.

Hetzner is pretty quick at provisioning cloud servers; they are typically ready in 10-20 seconds. Once the server is ready, take note of its public IPv4 address, then go to Cloudflare's DNS settings for your domain, and configure an A record pointing to that IP. Make sure you configure the domain or subdomain you want to use for your Mastodon web app, like botta.social in my case.

Preparing the server

adduser vito
usermod -aG sudo vito

In the example above, I have created a user named vito and added it to the sudo group. Of course name your user as you please. I am not entirely sure if this is still needed, but I also added the following line to /etc/sudoers:

vito ALL=(ALL) NOPASSWD:ALL

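Adding the user to the sudo group is already enough to use sudo; this extra line only removes the password prompt. That is convenient for unattended scripts that call sudo, such as the backup scripts later in this post, but it also means that anyone who gets into this account has root. If you add it, edit the file with visudo so that a syntax error can't lock you out of sudo.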

Now you need to copy your public SSH key to this new user:

ssh-copy-id -i ~/.ssh/<private key> vito@<server IP>

Next, edit /etc/ssh/sshd_config and edit as follows:

ClientAliveInterval 300
ClientAliveCountMax 1
PermitRootLogin no
PasswordAuthentication no
Port 1908
MaxAuthTries 2
AllowTcpForwarding no
X11Forwarding no
AllowAgentForwarding no
AllowUsers vito

For Port you can use a port other than 1908 (which I use) if you prefer; for AllowUsers make sure you specify only the name of the user you created earlier.

Test the config:

sudo sshd -t

If all looks good, restart the SSH server to apply these changes:

sudo systemctl restart sshd
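With SSH now on a custom port and restricted to one user, it can be handy to add an entry to ~/.ssh/config on your own machine so you don't have to type the port every time. The helper below just prints such an entry. `ssh_config_entry`, the `mastodon` alias and the 203.0.113.10 address are placeholders I picked for illustration.

```shell
#!/usr/bin/env bash

# Print an OpenSSH client config block for the given alias, host, port and user.
ssh_config_entry() {
  local name="$1" host="$2" port="$3" user="$4"
  cat <<EOF
Host ${name}
    HostName ${host}
    Port ${port}
    User ${user}
EOF
}

# Example (run on your own machine, not on the server):
#   ssh_config_entry mastodon 203.0.113.10 1908 vito >> ~/.ssh/config
#   ssh mastodon
```

Before closing your current session, open a second terminal and check that you can log in with the new settings.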

Next, I recommend you install Fail2ban, which can ban IPs that originate brute-force attacks against SSH. This is not critical if you use a non-standard port for SSH and disable password authentication like we did, but it's good to have anyway:

sudo apt install fail2ban

Create a custom config:

sudo cp /etc/fail2ban/jail.conf /etc/fail2ban/jail.local

Then edit /etc/fail2ban/jail.local and change the port in the [sshd] section to the SSH port you specified earlier. Restart Fail2ban:

sudo systemctl restart fail2ban

And check if it's working with

sudo fail2ban-client status sshd

The next thing we want to do is set up Docker, since we are going to run Mastodon as containers for simplicity.

curl -fsSL https://get.docker.com -o get-docker.sh
sudo sh get-docker.sh
sudo usermod -aG docker $USER

In order to be able to run Docker commands with your non-root user, log out and log in again, then test with:

docker ps

If you don't get any errors, proceed with creating a network that we'll use to link the Mastodon containers with the Nginx Proxy Manager one:

docker network create apps
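Running `docker network create` a second time fails because the network already exists, so if you plan to script the setup you may prefer an idempotent variant. A minimal sketch, where `ensure_network` is a hypothetical helper name:

```shell
#!/usr/bin/env bash

# Create the named Docker network only if it does not exist yet.
ensure_network() {
  local name="$1"
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network ${name} already exists"
  else
    docker network create "$name" >/dev/null && echo "network ${name} created"
  fi
}

# Example: ensure_network apps
```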

Setting up Mastodon

|- mariadb
|  |- .env
|  |- docker-compose.yml
|- mastodon
|  |- .env
|  |- docker-compose.yml
|- nginx-proxy-manager
|  |- .env
|  |- docker-compose.yml
|- start.sh
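The layout above can be created in one go. This sketch assumes the tree lives in a directory named compose (which is what the paths used later in this post suggest); `create_layout` is a made-up helper name, and restricting the .env files to mode 600 is my own addition since they will hold passwords.

```shell
#!/usr/bin/env bash

# Create the directory tree with empty .env and docker-compose.yml files.
create_layout() {
  local base="$1" dir
  for dir in mariadb mastodon nginx-proxy-manager; do
    mkdir -p "${base}/${dir}"
    touch "${base}/${dir}/.env" "${base}/${dir}/docker-compose.yml"
    # .env files will contain secrets, so keep them private (my assumption)
    chmod 600 "${base}/${dir}/.env"
  done
  touch "${base}/start.sh"
  chmod +x "${base}/start.sh"
}

# Example: create_layout "$HOME/compose"
```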

MariaDB

We are going to use MariaDB for the database used by Nginx Proxy Manager. Edit the mariadb/.env file as follows:

MARIADB_ROOT_PASSWORD=...

Set a password. You'll need it soon.
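If you need a quick way to come up with a password, something like the following works on a stock Ubuntu install. It is a sketch: `gen_password` is a hypothetical name, and I chose hexadecimal output on purpose so that the password contains no characters that need quoting in a .env file or on a command line.

```shell
#!/usr/bin/env bash

# Print a random hexadecimal password built from N random bytes
# (default 16 bytes, which gives 32 characters).
gen_password() {
  local bytes="${1:-16}"
  od -An -N"$bytes" -tx1 /dev/urandom | tr -d ' \n'
  echo
}

# Example: echo "MARIADB_ROOT_PASSWORD=$(gen_password)" > mariadb/.env
```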

Edit mariadb/docker-compose.yml as follows:

version: "3.3"

networks:
  default:
    name: apps

services:
  mariadb:
    image: mariadb:10.5
    container_name: mariadb
    restart: unless-stopped
    ports:
      - "127.0.0.1:3306:3306"
    env_file:
      - /home/vito/compose/mariadb/.env
    volumes:
      - /home/vito/apps/mariadb:/var/lib/mysql

Replace the username in the paths with yours.

Mastodon

You can leave mastodon/.env empty for now (it will be populated by a setup command soon); edit the mastodon/docker-compose.yml file as follows:

version: "3.7"

networks:
  default:
    name: apps

services:
  db:
    container_name: mastodon-postgres
    restart: always
    image: postgres:14-alpine
    user: root
    shm_size: 256mb
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "postgres"]
    volumes:
      - /home/vito/apps/mastodon/postgres:/var/lib/postgresql/data
    ports:
      - 127.0.0.1:5432:5432
    environment:
      POSTGRES_HOST_AUTH_METHOD: trust

  redis:
    container_name: mastodon-redis
    restart: always
    image: redis:7-alpine
    user: root
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
    volumes:
      - /home/vito/apps/mastodon/redis:/data

  es:
    container_name: mastodon-elasticsearch
    restart: always
    image: docker.elastic.co/elasticsearch/elasticsearch-oss:7.10.2
    user: root
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - "cluster.name=es-mastodon"
      - "discovery.type=single-node"
      - "bootstrap.memory_lock=true"
    healthcheck:
      test: ["CMD-SHELL", "curl --silent --fail localhost:9200/_cluster/health || exit 1"]
    volumes:
      - /home/vito/apps/mastodon/elasticsearch:/usr/share/elasticsearch/data
    ulimits:
      memlock:
        soft: -1
        hard: -1

  web:
    container_name: mastodon-web
    image: tootsuite/mastodon:v3.5.3
    user: root
    restart: always
    command: bash -c "rm -f /mastodon/tmp/pids/server.pid; bundle exec rails s -p 3000"
    healthcheck:
      test: ["CMD-SHELL", "wget -q --spider --proxy=off localhost:3000/health || exit 1"]
    ports:
      - "127.0.0.1:3000:3000"
    depends_on:
      - db
      - redis
      - es
    volumes:
      - /home/vito/apps/mastodon/public/system:/mastodon/public/system
    env_file:
      - /home/vito/compose/mastodon/.env

  streaming:
    container_name: mastodon-streaming
    image: tootsuite/mastodon:v3.5.3
    user: root
    restart: always
    command: node ./streaming
    healthcheck:
      test: ["CMD-SHELL", "wget -q --spider --proxy=off localhost:4000/api/v1/streaming/health || exit 1"]
    ports:
      - "127.0.0.1:4000:4000"
    depends_on:
      - db
      - redis
    environment:
      VIRTUAL_HOST: botta.social
      VIRTUAL_PATH: "/api/v1/streaming"
      VIRTUAL_PORT: 4000
    env_file:
      - /home/vito/compose/mastodon/.env

  sidekiq:
    container_name: mastodon-sidekiq
    image: tootsuite/mastodon:v3.5.3
    user: root
    restart: always
    command: bundle exec sidekiq
    depends_on:
      - db
      - redis
    volumes:
      - /home/vito/apps/mastodon/public/system:/mastodon/public/system
    env_file:
      - /home/vito/compose/mastodon/.env

This compose file describes all the Mastodon components; you need to edit the paths for the env_file setting to replace vito with your username. Same thing for the paths in volumes. For the streaming container also change the VIRTUAL_HOST domain with yours.

Nginx Proxy Manager

Edit nginx-proxy-manager/.env as follows:

DB_MYSQL_HOST="mariadb"
DB_MYSQL_PORT=3306
DB_MYSQL_USER="root"
DB_MYSQL_PASSWORD="...."
DB_MYSQL_NAME="nginx"

Be sure to set the password to the same one you specified for MariaDB. Next, edit nginx-proxy-manager/docker-compose.yml as follows:

version: "3.3"

networks:
  default:
    name: apps

services:
  nginx:
    image: 'jc21/nginx-proxy-manager:latest'
    container_name: nginx
    restart: unless-stopped
    ports:
      - '80:80' # Public HTTP Port
      - '443:443' # Public HTTPS Port
      - '81:81' # Admin Web Port
    depends_on:
      - mariadb
    env_file:
      - /home/vito/compose/nginx-proxy-manager/.env
    volumes:
      - /home/vito/apps/nginx/data:/data
      - /home/vito/apps/nginx/letsencrypt:/etc/letsencrypt

Again, replace the username in the paths with yours.

start.sh

Edit the script start.sh as follows:

#!/bin/bash

docker compose \
  -f /home/vito/compose/mariadb/docker-compose.yml \
  -f /home/vito/compose/nginx-proxy-manager/docker-compose.yml \
  -f /home/vito/compose/mastodon/docker-compose.yml \
  up -d --remove-orphans

Again, make sure you replace the username with the correct one.
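Since the same /home/vito/ prefix appears in every file, you can swap the username in all of them at once instead of editing each path by hand. This is a sketch that assumes GNU sed (the default on Ubuntu) and a username without special characters; `retarget_paths` is a hypothetical helper name.

```shell
#!/usr/bin/env bash

# Replace the /home/vito/ prefix with /home/<user>/ in the given files, in place.
retarget_paths() {
  local user="$1"; shift
  sed -i "s#/home/vito/#/home/${user}/#g" "$@"
}

# Example:
#   cd ~/compose
#   retarget_paths "$USER" start.sh */docker-compose.yml
```

Afterwards, a quick `grep -r vito ~/compose` should return nothing if every path was updated.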

Initial setup

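Copy the compose directory with these files to your home directory on the server, make the script executable with chmod +x compose/start.sh, and run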

compose/start.sh

This will pull the images and create all the containers, but most of them won't start correctly yet.

Install the PostgreSQL client:

sudo sh -c 'echo "deb http://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" > /etc/apt/sources.list.d/pgdg.list'
wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo apt-key add -
sudo apt -y update
sudo apt -y install postgresql-14
sudo systemctl stop postgresql
sudo systemctl disable postgresql

Then start a psql shell with the postgres container:

psql -h 127.0.0.1 -p 5432 -U postgres

and create the mastodon database:

CREATE DATABASE mastodon;

Then press ctrl-D to exit the shell.

Run the following command to generate the Mastodon configuration:

docker compose -f compose/mastodon/docker-compose.yml \
  run --rm -v /home/vito/compose/mastodon/.env:/opt/mastodon/.env.production \
  -e RUBYOPT=-W0 web bundle exec rake mastodon:setup

This command will ask you a number of questions, including which domain you want to use, the name of the database, and your object storage and email delivery settings. Don't rush: read each question and answer it according to the information you should have by now, and accept the defaults for anything I haven't covered. You will also be asked if you want to create a single-user instance; I recommend you answer no and disable signups later, for flexibility. You will also be able to create your first account, the admin account. Make sure you say yes to saving the configuration, which will fill in the file mastodon/.env. Then append the following lines to that file to enable full-text search with Elasticsearch:

ES_ENABLED=true
ES_HOST=mastodon-elasticsearch
ES_PORT=9200
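If you end up re-running the setup or scripting it, appending blindly would leave duplicate keys in the file. Here is a small sketch of an append that only adds a key when it is missing; `ensure_env_line` is a hypothetical helper, and it deliberately never overwrites a value that is already there.

```shell
#!/usr/bin/env bash

# Append KEY=VALUE to the file unless a line starting with KEY= already exists.
ensure_env_line() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s=%s\n' "$key" "$value" >> "$file"
}

# Example:
#   ensure_env_line ~/compose/mastodon/.env ES_ENABLED true
#   ensure_env_line ~/compose/mastodon/.env ES_HOST mastodon-elasticsearch
#   ensure_env_line ~/compose/mastodon/.env ES_PORT 9200
```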

Now restart the containers:

docker compose \
  -f /home/vito/compose/mariadb/docker-compose.yml \
  -f /home/vito/compose/nginx-proxy-manager/docker-compose.yml \
  -f /home/vito/compose/mastodon/docker-compose.yml \
  restart

And check the logs for each container, ignoring Nginx for now. You can see which containers are running with the command

docker ps

and then check the logs for a container with the command

docker logs -f mastodon-web # or name of other container

At this point, all the Mastodon containers should start correctly. If you run into issues with this, let me know in the comments.
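Instead of watching the logs, you can also poll the health status that Docker records for containers that define a healthcheck (in the compose file above that is everything except sidekiq). A rough sketch, where `wait_healthy` is a hypothetical helper and the retry count and delay are arbitrary defaults of mine:

```shell
#!/usr/bin/env bash

# Poll a container's health status until it reports "healthy".
# Arguments: container name, number of attempts (default 30),
# delay between attempts in seconds (default 5).
wait_healthy() {
  local container="$1" attempts="${2:-30}" delay="${3:-5}" status="" i
  for ((i = 0; i < attempts; i++)); do
    status="$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null || true)"
    if [ "$status" = "healthy" ]; then
      echo "${container} is healthy"
      return 0
    fi
    sleep "$delay"
  done
  echo "${container} did not become healthy (last status: ${status:-unknown})" >&2
  return 1
}

# Example:
#   for c in mastodon-postgres mastodon-redis mastodon-elasticsearch mastodon-web mastodon-streaming; do
#     wait_healthy "$c" || docker logs --tail 50 "$c"
#   done
```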

To create the indices for Elasticsearch, first exec into the web container:

docker exec -it mastodon-web bash

Then from inside the container run:

RAILS_ENV=production bin/tootctl search deploy

Setting up Nginx

First, install the MariaDB client:

sudo apt install mariadb-client

Then open a shell:

mysql -uroot -p<your mariadb password> -h 127.0.0.1

and create a database for Nginx:

CREATE DATABASE nginx;

Now restart the Nginx container:

docker restart nginx

Check the logs and, once it has started, open the URL http://<server IP>:81. This will show you the Nginx Proxy Manager admin panel. Log in with username: admin@example.com and password: changeme, then be sure to change username and password right away.

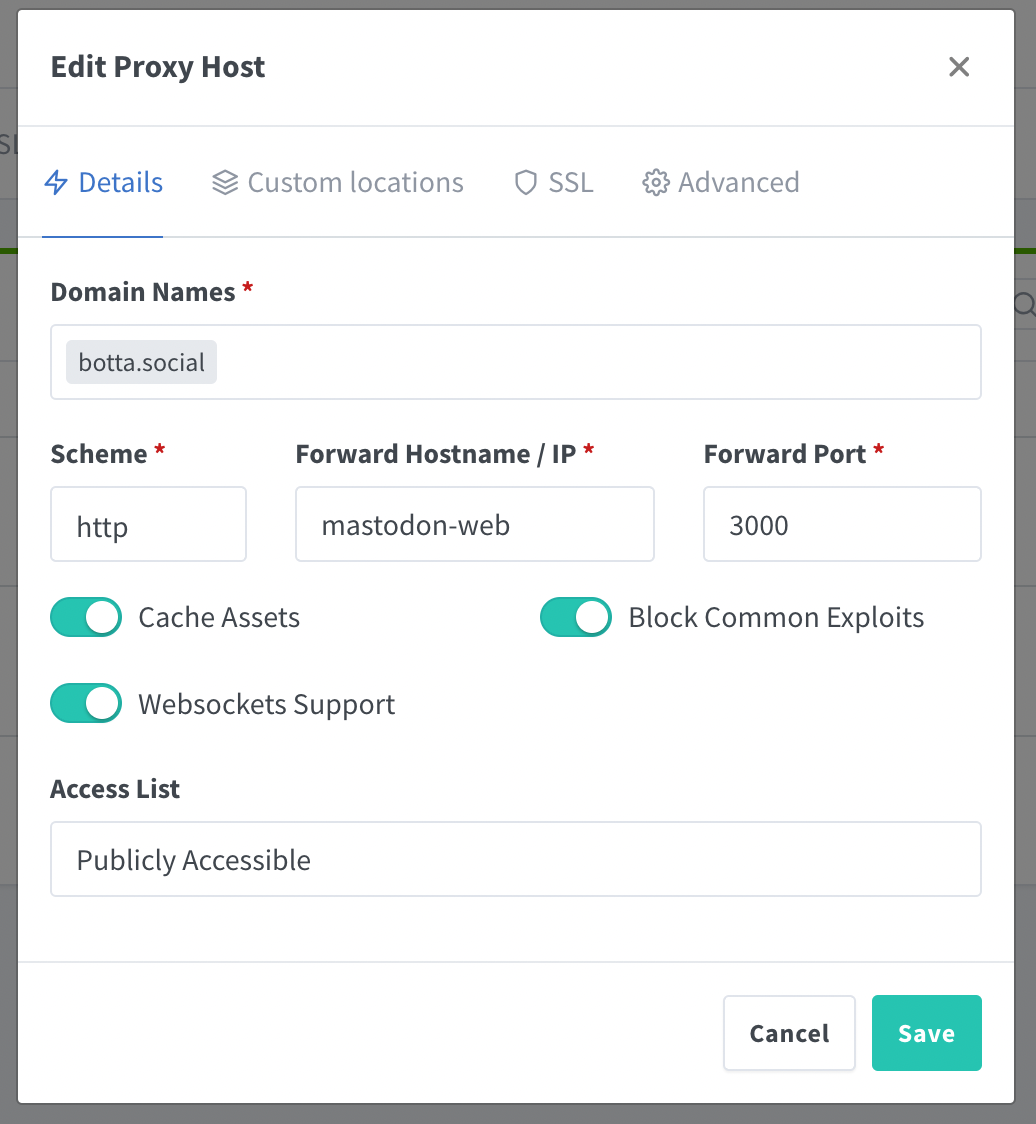

Now go to Proxy Hosts, and add a new host:

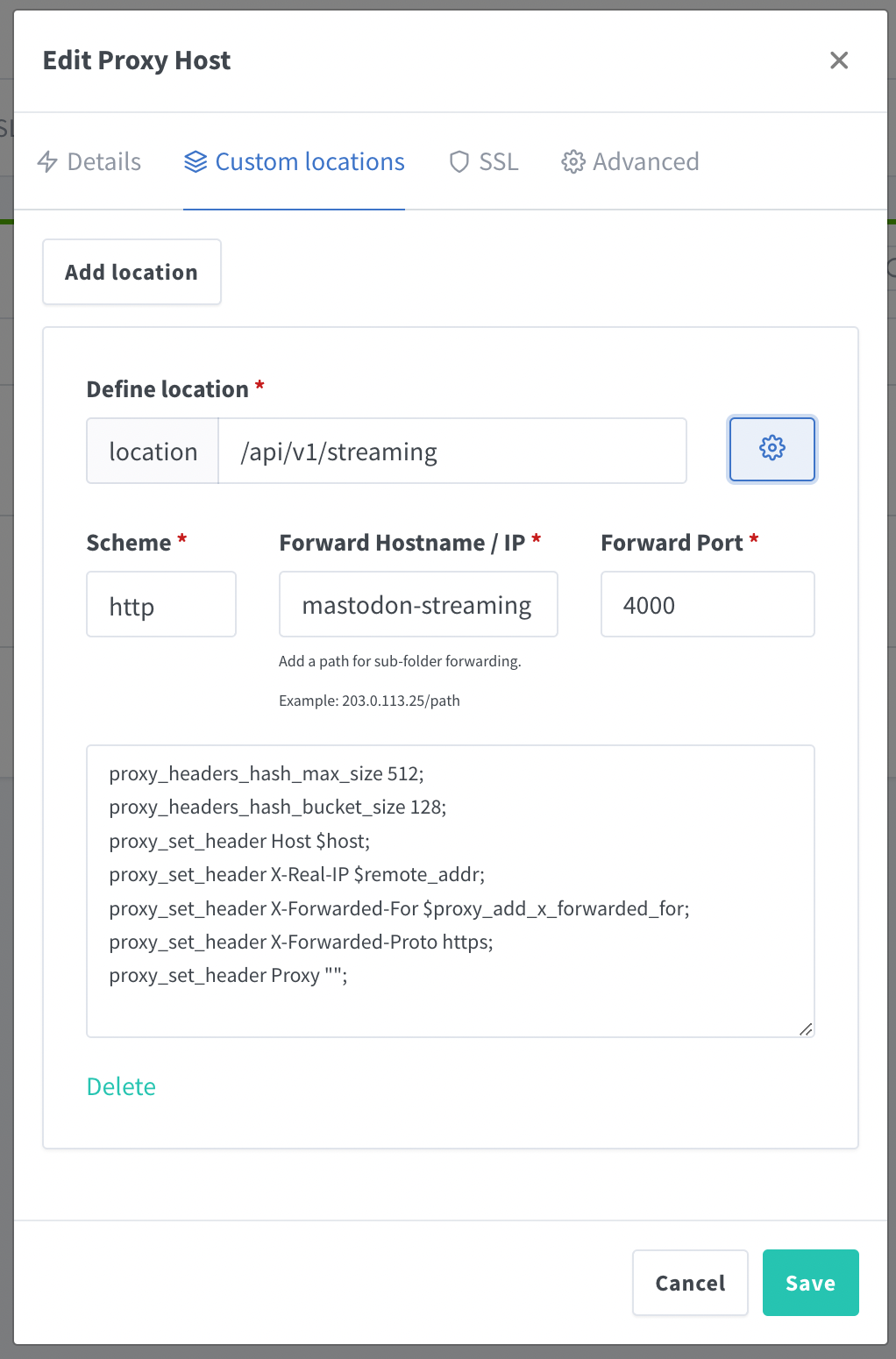

Of course, use your own domain instead of botta.social. Before you save, go to Custom locations and add the location for the streaming as in the picture:

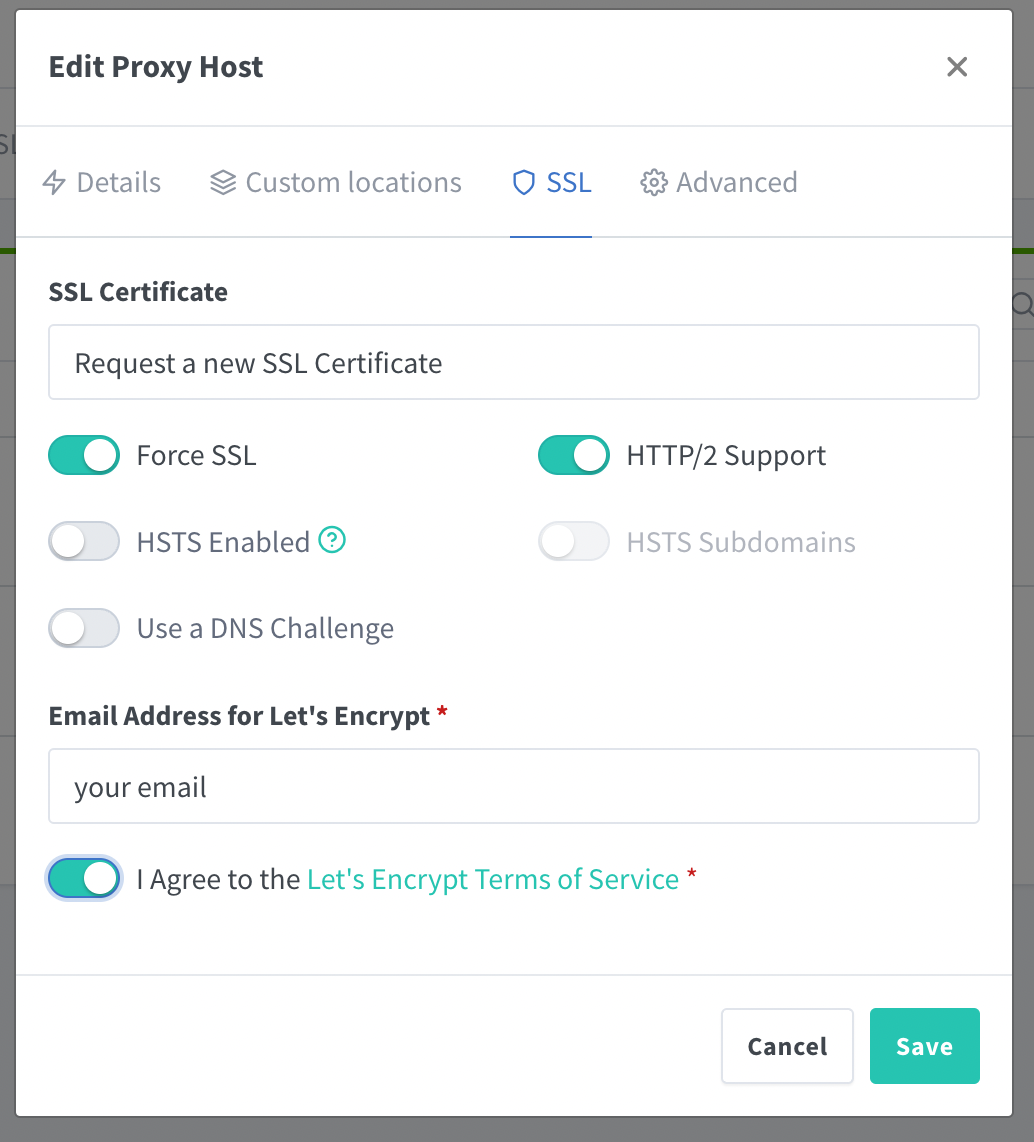

Under SSL, configure as in the picture:

Specify your email address for Let's Encrypt notifications (Nginx Proxy Manager uses Let's Encrypt to provision free SSL certificates), then go to Advanced and enter the following in the textarea:

proxy_set_header X-Forwarded-forward_scheme $scheme;
proxy_set_header X-Forwarded-Proto $forward_scheme;
real_ip_header CF-Connecting-IP;

You can now save. It will take a few moments because it will provision the certificate. Once it's ready, you will be able to log in to your Mastodon instance at your custom domain. Yay!!!

The setup at this point is basically complete, but there is one more thing we need to cover.

Backups

For these backups you can use any tool, but one relatively new tool I like is Kopia, which is like an improved version of the popular Restic. Kopia works well with S3 compatible storage, and we are already using Wasabi (or whichever other service you decided to use instead of it), so we can just use a bucket there.

So head to Wasabi, and create a bucket with a unique name specifically for backups. Then install Kopia on the server:

curl -s https://kopia.io/signing-key | sudo gpg --dearmor -o /usr/share/keyrings/kopia-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/kopia-keyring.gpg] http://packages.kopia.io/apt/ stable main" | sudo tee /etc/apt/sources.list.d/kopia.list
sudo apt update
sudo apt install kopia

export KOPIA_BACKUP_PROVIDER=wasabi
export KOPIA_CONFIG_FILE=/home/vito/.config/kopia/repository-$KOPIA_BACKUP_PROVIDER.config
sudo -E kopia repository create s3 \
  --bucket=... \
  --access-key=... \
  --secret-access-key=... \
  --region=eu-central-2 \
  --endpoint=s3.eu-central-2.wasabisys.com

The command above will prepare the Kopia repository. Then create the file ~/.kopiaignore

/snap
/apps/nginx/data/logs
/.cache
/.docker
/apps/mastodon/postgres

And perform the first backup:

sudo -E kopia repository connect s3 \
  --bucket=... \
  --access-key=... \
  --secret-access-key=.... \
  --region=eu-central-2 \
  --endpoint=s3.eu-central-2.wasabisys.com \
  --config-file=$KOPIA_CONFIG_FILE \
  --password=...

sudo -E kopia snapshot create --config-file $KOPIA_CONFIG_FILE /home/vito

We also want to back up the MariaDB database for Nginx and the Postgres one for Mastodon, as well as automate the full backup. For this create a directory named ~/scripts, and a file backup-mariadb in it with the content below:

#!/bin/bash

set -e

BACKUP_DATE=$(date +%Y/%m/%d)
BACKUP_TIME=$(date +%H-%M-%S)
NUMBER_OF_DAYS=7 # retention, in days
BACKUP_DIR="/home/vito/backups/mariadb"
BY_DATE_DIR="$BACKUP_DIR/by-date/"
LATEST_DIR="$BACKUP_DIR/latest/"
DUMP_DIR="$BY_DATE_DIR$BACKUP_DATE"
DATABASES=(nginx)

mkdir -p "$LATEST_DIR"
mkdir -p "$DUMP_DIR"

for DATABASE in "${DATABASES[@]}"; do
  SQL="$DATABASE-$BACKUP_TIME.sql"
  DUMP_PATH="$DUMP_DIR/$SQL"

  echo "Dumping $DATABASE to $DUMP_PATH..."
  mysqldump -uroot -p<mariadb password> -h 127.0.0.1 --opt "$DATABASE" > "$DUMP_PATH"
  gzip "$DUMP_PATH"
  # Point latest/<database>.sql.gz at the newest dump
  ln -fs "$DUMP_PATH.gz" "$LATEST_DIR$DATABASE.sql.gz"
done

# Remove day directories (by-date/YYYY/MM/DD) older than the retention period;
# -prune stops find from descending into a directory it is about to delete
find "$BY_DATE_DIR"/*/*/* -type d -mtime +"$NUMBER_OF_DAYS" -prune -exec rm -rf {} \;
find "$LATEST_DIR" -type f -mtime +"$NUMBER_OF_DAYS" -exec rm -f {} \;

Change the MariaDB password and make the script executable with

chmod +x scripts/backup-mariadb

Next, create the file ~/scripts/backup-postgres:

#!/bin/bash

set -e

BACKUP_DATE=$(date +%Y/%m/%d)
BACKUP_TIME=$(date +%H-%M-%S)
NUMBER_OF_DAYS=7 # retention, in days
BACKUP_DIR="/home/vito/backups/postgres"
BY_DATE_DIR="$BACKUP_DIR/by-date/"
LATEST_DIR="$BACKUP_DIR/latest/"
DUMP_DIR="$BY_DATE_DIR$BACKUP_DATE"
DATABASES=(mastodon)

mkdir -p "$LATEST_DIR"
mkdir -p "$DUMP_DIR"

for DATABASE in "${DATABASES[@]}"; do
  SQL="$DATABASE-$BACKUP_TIME.sql"
  DUMP_PATH="$DUMP_DIR/$SQL"

  echo "Dumping $DATABASE to $DUMP_PATH..."
  pg_dump --clean -h 127.0.0.1 -p 5432 -U postgres "$DATABASE" > "$DUMP_PATH"
  gzip "$DUMP_PATH"
  # Point latest/<database>.sql.gz at the newest dump
  ln -fs "$DUMP_PATH.gz" "$LATEST_DIR$DATABASE.sql.gz"
done

# Remove day directories (by-date/YYYY/MM/DD) older than the retention period;
# -prune stops find from descending into a directory it is about to delete
find "$BY_DATE_DIR"/*/*/* -type d -mtime +"$NUMBER_OF_DAYS" -prune -exec rm -rf {} \;
find "$LATEST_DIR" -type f -mtime +"$NUMBER_OF_DAYS" -exec rm -f {} \;

And make it executable:

chmod +x scripts/backup-postgres

Then create ~/scripts/backup-wasabi for the files backup:

#!/bin/bash

[ "${FLOCKER}" != "$0" ] && exec env FLOCKER="$0" flock -en "$0" "$0" "$@" || :

set -e

export KOPIA_CHECK_FOR_UPDATES=false

sudo -E kopia repository connect s3 \
  --bucket=<wasabi bucket for backups> \
  --access-key=... \
  --secret-access-key=.... \
  --region=<wasabi backup bucket region> \
  --endpoint=<wasabi backup bucket endpoint> \
  --config-file=/home/vito/.config/kopia/repository-wasabi.config \
  --password=<backup password>

sudo -E kopia snapshot create --config-file /home/vito/.config/kopia/repository-wasabi.config /home/vito

sudo -E kopia repository disconnect --config-file /home/vito/.config/kopia/repository-wasabi.config

Make it executable:

chmod +x scripts/backup-wasabi

Then create the file ~/scripts/backup:

#!/bin/bash

[ "${FLOCKER}" != "$0" ] && exec env FLOCKER="$0" flock -en "$0" "$0" "$@" || :

date

set -e

echo "Backing up mariadb databases..."
/home/vito/scripts/backup-mariadb
echo
echo

echo "Backing up postgres databases..."
/home/vito/scripts/backup-postgres
echo
echo

echo "Backing up apps to Wasabi..."
/home/vito/scripts/backup-wasabi
echo
echo

echo "...Backup complete."

And make it executable:

chmod +x scripts/backup

Run it a first time:

scripts/backup

This should dump the databases into the ~/backups directory (with a 7-day retention), and then create a snapshot with Kopia.
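A backup is only useful if it can be restored, so it is worth trying a restore at least once. Because the Postgres dumps are plain SQL compressed with gzip, they can be piped straight back into psql. The sketch below assumes the same connection settings used in the backup script; `restore_postgres_dump` is a hypothetical helper name. Since the dump is taken with --clean, restoring into the live database drops and recreates its objects, so stop the Mastodon containers first or restore into a scratch database.

```shell
#!/usr/bin/env bash

# Restore a gzipped plain-SQL dump into the given database.
# Connection settings match the ones used by the backup script.
restore_postgres_dump() {
  local dump="$1" database="$2"
  gunzip -c "$dump" | psql -h 127.0.0.1 -p 5432 -U postgres "$database"
}

# Example:
#   restore_postgres_dump ~/backups/postgres/latest/mastodon.sql.gz mastodon
```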

Finally, schedule the backups with crontab:

0 * * * * /home/vito/scripts/backup

In the example above, I am backing up my instance every hour.
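If you prefer to install the crontab entry from a script rather than with `crontab -e`, the entry can be merged into the existing crontab without creating duplicates. This is a sketch, and `merge_cron_line` is a hypothetical helper: it reads the current crontab on standard input and prints it back with the given line present exactly once.

```shell
#!/usr/bin/env bash

# Read a crontab on stdin and print it with the given line added once.
merge_cron_line() {
  local line="$1"
  # Pass through every existing line except an exact copy of the new one;
  # grep exits non-zero when nothing is left, which is fine here.
  grep -vxF -- "$line" || true
  echo "$line"
}

# Example:
#   crontab -l 2>/dev/null | merge_cron_line '0 * * * * /home/vito/scripts/backup' | crontab -
```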

Wrapping up

While closing, I'd like to mention a few useful sites that could help you find communities and people to follow:

Social search

https://search.noc.social/

Fedi.directory

https://fedi.directory/

Trunk

https://communitywiki.org/trunk

Fediverse.info

https://fediverse.info/explore/people

There is also a tool that can automatically crosspost your tweets and "toots" (as they are called in Mastodon) between Twitter and Mastodon, if you haven't decided to leave Twitter yet:

Mastodon Twitter Crossposter:

https://crossposter.masto.donte.com.br/

I am passionate about WebDev, DevOps and CyberSecurity. I am based in Espoo, Finland, where I work with the backend team at
